Every organization today wants to run on data, yet most modernization initiatives fall short of their goals. CIOs across industries describe the same experience: large volumes of data sit in separate, isolated systems, expensive data stores are not fully trusted, and analytics initiatives produce numerous dashboards that fail to drive actionable decisions.

The truth is, transformation begins before cloud or AI implementation. It begins with how data moves, how it is structured, and how usable it is. When your data foundation stays outdated, new digital projects cost more, take longer, and deliver less.

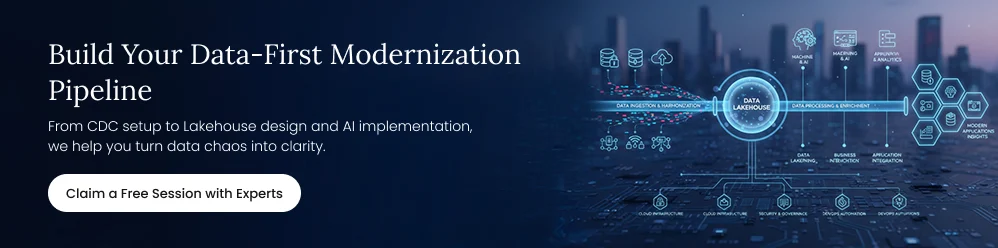

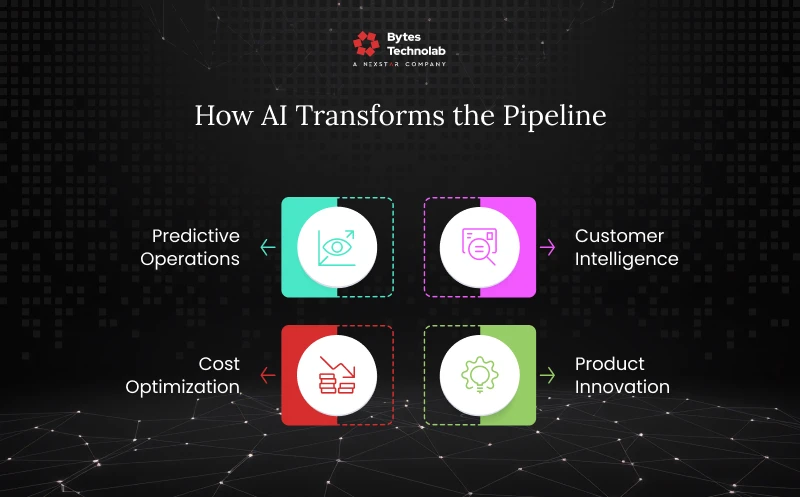

That’s where a data-first modernization pipeline makes all the difference. The combination of CDC (Change Data Capture), a Lakehouse architecture, and AI-driven intelligence creates a cycle where data flows continuously, decisions happen faster, and modernization efforts finally connect with measurable business outcomes.

At Bytes Technolab, we’ve seen this shift firsthand while helping enterprises and fast-growing startups rebuild their systems. As a product engineering and AI development company, we find that our clients no longer ask “What’s the best analytics tool?” but “How do we make our data useful in real time?”

This article breaks down how the CDC → Lakehouse → AI pipeline works, why it delivers real ROI, and how CIOs can use it to modernize systems faster, without replacing everything at once.